🌈 I am Xuyang Liu (刘旭洋), a third-year Master’s student at Sichuan University. I am also working as a research intern at OPPO Research Institute, supervised by Prof. Lei Zhang (PolyU, IEEE Fellow). Previously, I interned at Ant Group, focusing on GUI agents, and at Taobao & Tmall Group, working on efficient VLMs. I also spent half a year visiting MiLAB at Westlake University, supervised by Prof. Donglin Wang. I am fortunate to work closely with Dr. Siteng Huang from DAMO Academy and Prof. Linfeng Zhang from SJTU.

📌 My research centers on efficient Large Vision-Language Models (LVLMs), including:

- 🖼️ Image-Text LVLMs: high-resolution understanding via context compression and fast decoding, including GlobalCom2 [AAAI’26], V2Drop [CVPR’26], FiCoCo [AAAI’26], and MixKV [ICLR’26].

- 🎬 Video Understanding: long-video, audio-video, and streaming reasoning via efficient encoding and compression, including VidCom2 [EMNLP’25], STC [CVPR’26], and OmniSIFT.

- ⚙️ Efficiency Toolbox: efficient transfer/fine-tuning and benchmarking for downstream task adaptation, including M2IST [TCSVT’25], V-PETL [NeurIPS’24], and AutoGnothi [ICLR’25].

📢 If you find these directions interesting, feel free to reach out via email: liuxuyang@stu.scu.edu.cn.

🔥 News

- 2026.02.21 🎊🎊 Three papers have been accepted by CVPR 2026: V2Drop for token compression in VLMs, STC for efficient streaming video understanding, and Prune2Drive for token compression in autonomous driving!

- 2026.01.26 🎊🎊 Two papers have been accepted by ICLR 2026: MixKV for fast decoding in VLMs/LLMs, and DIJA, the first safety study of dLLMs! Congratulations to all collaborators!

- 2025.11.08 🎊🎊 Three papers have been accepted by AAAI 2026: two LVLM acceleration methods, GlobalCom2 and FiCoCo, and an RL-based GUI grounding training framework, GUI-G2!

- 2025.08.21 🎊🎊 One first-author paper, VidCom2, on plug-and-play inference acceleration for VideoLLMs has been accepted by the EMNLP 2025 main conference! Code is available!

- 2025.05.27 🙌🙌 We released a new paper arguing for shifting AI efficiency from model-centric to data-centric compression. The project is available, and the paper was honored as the #2 Paper of the day!

- 2025.03.11 🎊🎊 One first-author paper (M2IST) on parameter-, memory-, and time-efficient fine-tuning for referring expression comprehension has been accepted by IEEE Transactions on Circuits and Systems for Video Technology (TCSVT)!

- 2025.02.22 🎊🎊 Two papers (ToCa and AutoGnothi) have been accepted by ICLR 2025! Congratulations to all collaborators!

- 2024.09.26 🎊🎊 One co-first-author paper (V-PETL) on a unified visual parameter-efficient transfer learning benchmark has been accepted by NeurIPS 2024!

📝 Publications

Full publications are on my Google Scholar profile. *: Equal contribution. †: Project leader.

🚩 Highlight: ICLR: 4, NeurIPS: 1, CVPR: 3, AAAI: 3, EMNLP: 1.

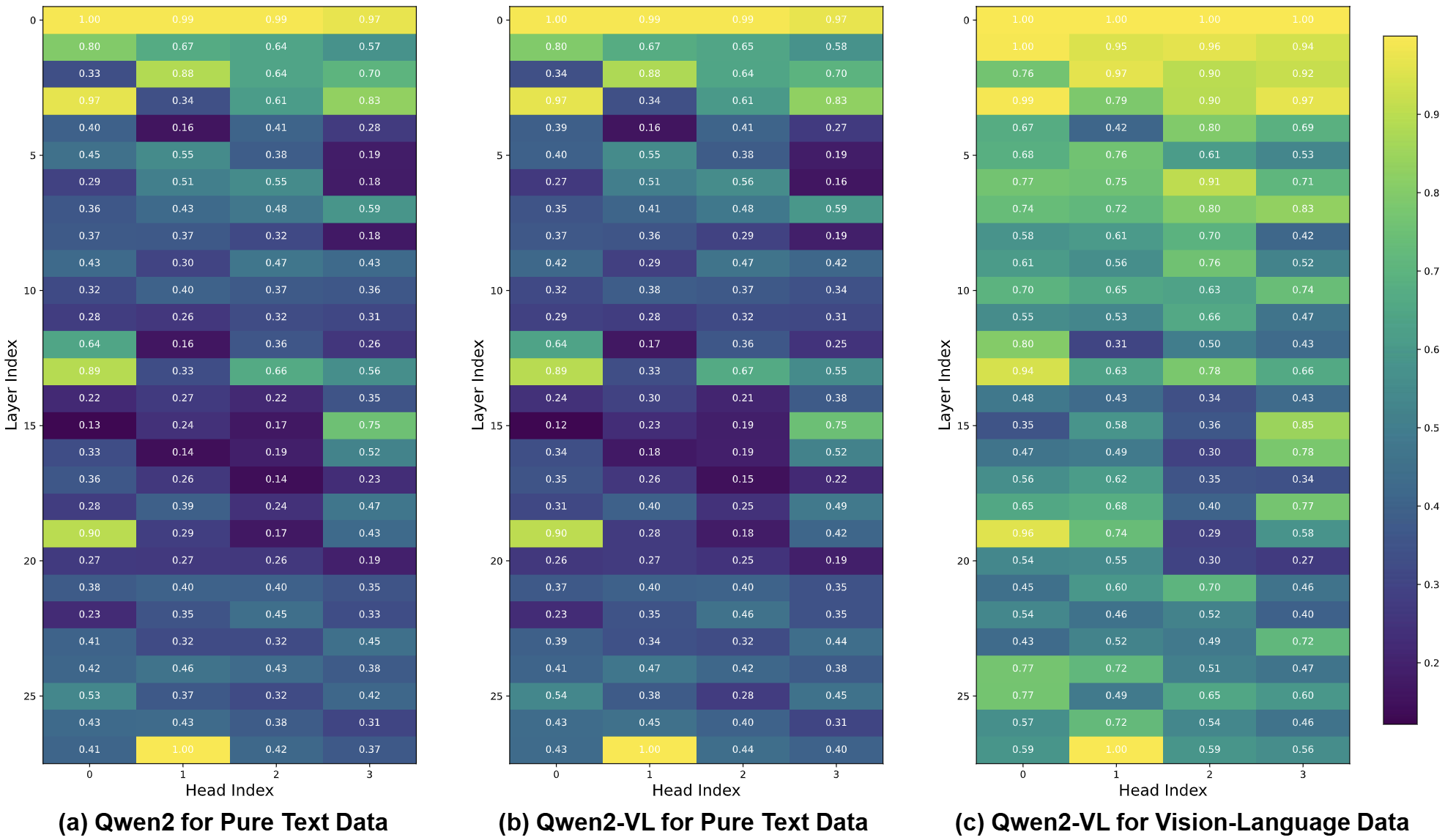

Xuyang Liu*, Xiyan Gui*, Yuchao Zhang, Linfeng Zhang

- Works with diverse LVLMs and LLMs, including the LLaVA, Qwen-VL, InternVL, Llama, and Mistral series.

- Integrates with existing compressors (e.g., SnapKV, AdaKV, SparseMM) and consistently improves their performance.

- Delivers accuracy gains (an average of +5.1% across 5 tasks) without sacrificing speed or memory efficiency.
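The compressors named above (SnapKV, AdaKV, SparseMM) decide which cached key/value positions to keep under a fixed budget. The sketch below shows importance-based selection in the spirit of SnapKV, assuming the attention weights from a recent "observation window" of queries are already available as a NumPy array for a single head. The function name and arguments are hypothetical, SnapKV's score-pooling step is omitted, and this is not MixKV's own selection rule.

```python
import numpy as np


def select_kv_positions(attn: np.ndarray, budget: int, window: int) -> np.ndarray:
    """Return sorted indices of cached KV positions to keep for one head.

    attn:   (window, seq_len) attention weights from the last `window` queries
            (the observation window) over all seq_len cached keys.
    budget: total KV positions to retain, including the window itself.
    """
    seq_len = attn.shape[1]
    if budget >= seq_len:
        return np.arange(seq_len)  # budget covers the whole cache: nothing to evict
    if budget < window:
        raise ValueError("budget must be at least the observation window size")
    prefix_len = seq_len - window
    # Importance of each earlier position: total attention it receives from the window.
    scores = attn[:, :prefix_len].sum(axis=0)
    # Keep the highest-scoring prefix positions that fit in the remaining budget.
    top = np.argsort(scores)[::-1][: budget - window]
    # The window itself (the most recent tokens) is always retained.
    return np.concatenate([np.sort(top), np.arange(prefix_len, seq_len)])


# Hypothetical toy example: 8 cached positions, window of 2, budget of 4.
attn = np.zeros((2, 8))
attn[:, 1] = 0.5
attn[:, 4] = 0.3
print(select_kv_positions(attn, budget=4, window=2))  # prints [1 4 6 7]
```

Here the two prefix positions that receive the most window attention (1 and 4) survive alongside the window (6 and 7); everything else is evicted from the cache.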

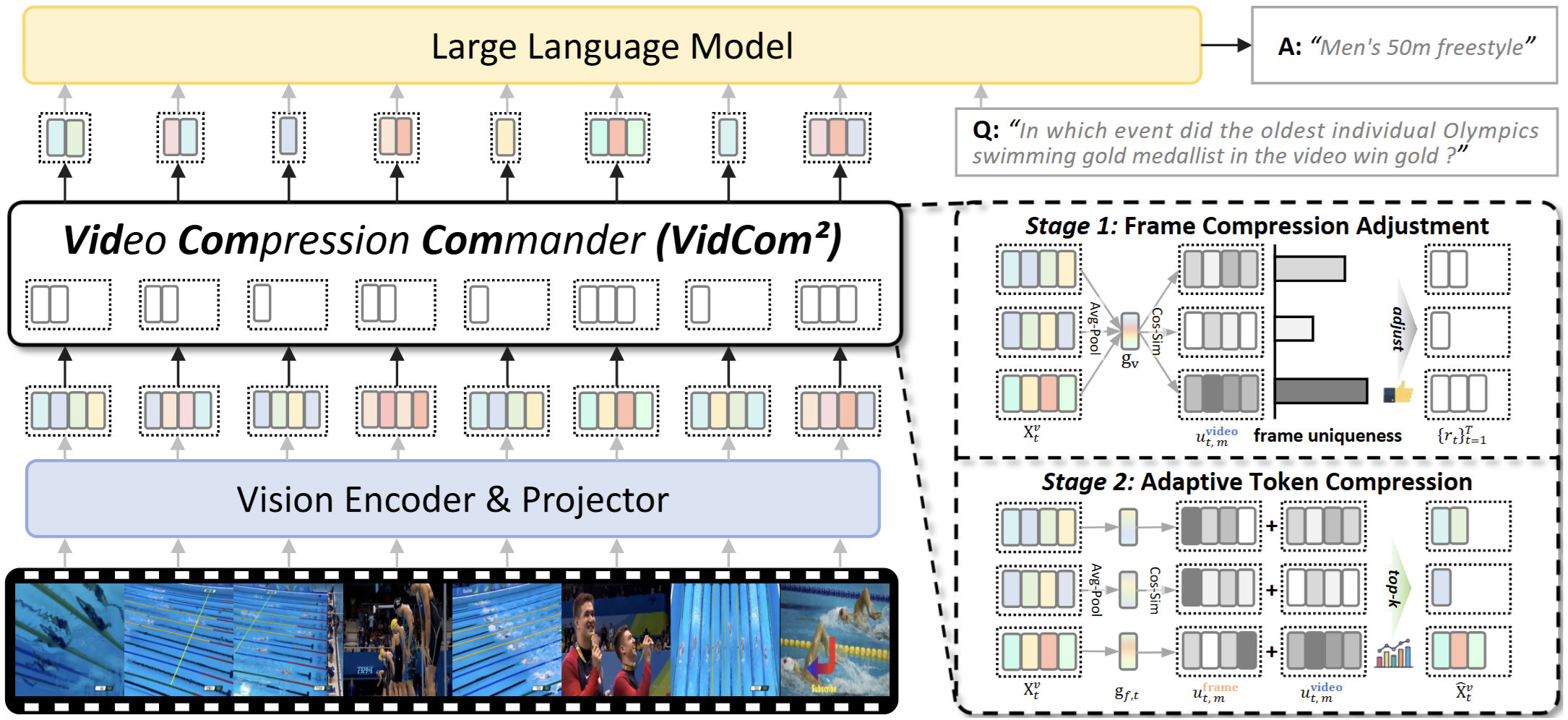

Video Compression Commander: Plug-and-Play Inference Acceleration for Video Large Language Models

Xuyang Liu*, Yiyu Wang*, Junpeng Ma, Linfeng Zhang

- Compatible with major VideoLLMs and OmniLLMs, including the LLaVA, Qwen-VL, and Qwen-Omni series.

- Uses only 25% of visual tokens while preserving 99.6% of LLaVA-OV's original performance.

- Reduces LLaVA-OV's LLM generation time by 70.8% and its overall latency by 43.0%.
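A 25% visual-token budget can be illustrated with generic global top-k pruning: score each visual token, keep the highest-scoring quarter, and preserve the survivors' original order so their spatial/temporal arrangement is not scrambled. This is a minimal sketch that assumes the per-token scores are supplied by the caller; the function name is hypothetical, and it does not reproduce VidCom2's actual token-selection strategy, which is not described here.

```python
import numpy as np


def prune_visual_tokens(tokens: np.ndarray, scores: np.ndarray, keep_ratio: float = 0.25) -> np.ndarray:
    """Keep the top `keep_ratio` fraction of visual tokens by score.

    tokens: (n, d) visual token embeddings.
    scores: (n,) per-token importance scores, supplied by the caller (hypothetical).
    """
    if not 0.0 < keep_ratio <= 1.0:
        raise ValueError("keep_ratio must be in (0, 1]")
    n = tokens.shape[0]
    k = max(1, int(round(n * keep_ratio)))
    # argpartition places the indices of the k largest scores in the last k slots (unordered).
    top = np.argpartition(scores, n - k)[n - k:]
    # Sort the indices so retained tokens keep their original order.
    return tokens[np.sort(top)]


tokens = np.arange(8, dtype=float).reshape(8, 1)  # toy 8-token sequence, embedding dim 1
scores = np.array([0.1, 0.9, 0.2, 0.8, 0.3, 0.05, 0.7, 0.0])
print(prune_visual_tokens(tokens, scores).ravel())  # prints [1. 3.]
```

`argpartition` avoids a full sort, which matters little at this size but scales better when each frame contributes hundreds of tokens.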

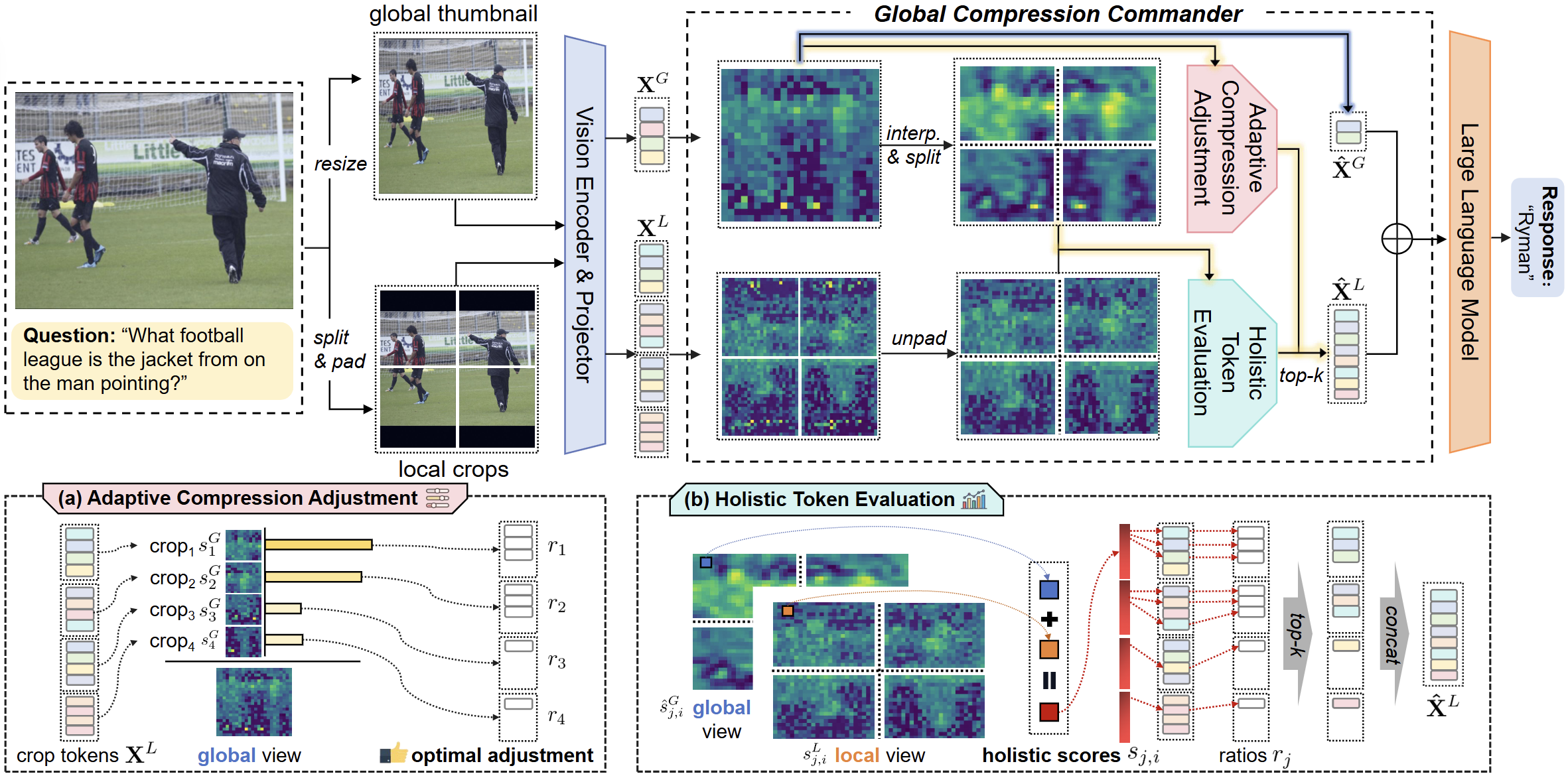

Xuyang Liu, Ziming Wang, Junjie Chen, Yuhang Han, Yingyao Wang, Jiale Yuan, Jun Song, Siteng Huang, Honggang Chen

- Compatible with major high-resolution LVLMs (HR-LVLMs), including LLaVA-NeXT and LLaVA-OV.

- Uses only 10% of visual tokens while maintaining over 90% of the original performance across 10 tasks.

- Reduces FLOPs to 9.1% and peak memory to 60% of the baseline, and achieves 1.8x throughput.

Conference Papers

Journal Papers

Preprints & Under Submission

🤗 Resources

Please find my full repositories on my GitHub profile.

- Awesome Generation Acceleration
  - Duty: Owner.
  - Description: An open-source repository that curates a collection of recent awesome papers on AIGC acceleration.

- Awesome Token-level Model Compression
  - Duty: Owner.
  - Description: An open-source repository that curates a collection of recent awesome papers on token-level model compression.

💻 Experiences

Internships

- Research Intern - OPPO Research Institute, OPPO, Shenzhen
  - Time: Jul 2025 - Present.
  - Thesis: Video Understanding with Large Vision-Language Models.
  - Supervisor: Prof. Lei Zhang.

- Research Intern - Ant Security Lab, Ant Group, Hangzhou
  - Time: Apr 2025 - Jul 2025.
  - Thesis: Multi-modal Graphical User Interface (GUI) Agents.

- Research Intern - Taobao & Tmall Group, Alibaba Group, Beijing
  - Time: Jul 2024 - Mar 2025.
  - Thesis: Efficient Multi-modal Large Language Models.

Visiting

- Research Assistant - EPIC Lab, Shanghai Jiao Tong University, Remote
  - Time: Jun 2024 - Present.
  - Thesis: Efficient Multi-modal Large Language Models.
  - Supervisor: Prof. Linfeng Zhang.

- Visiting Student - MiLAB, Westlake University, Hangzhou
  - Time: Mar 2023 - Sep 2023.
  - Thesis: Efficient Transfer of Vision-Language Models.
  - Supervisors: Dr. Siteng Huang and Prof. Donglin Wang.

🎤 Talks

- 2025.06.10: "Shifting AI Efficiency From Model-Centric to Data-Centric Compression", PolyU NLP Group (directed by Prof. Wenjie Li). [slides]

📠 Services

Conference Reviewer

- International Conference on Learning Representations (ICLR)

- International Conference on Machine Learning (ICML)

- Advances in Neural Information Processing Systems (NeurIPS)

- AAAI Conference on Artificial Intelligence (AAAI)

- ACM International Conference on Multimedia (MM)